Progress report! I took a break from my main project of research, a motion design tool which I’m calling real2d for now, to embark on a mini-journey: with my current skill set, how much of a Photoshop-like program can I implement in a month’s time?

I primarly did this to give myself the freedom to explore ideas of struct/memory layouts I usually wouldn’t think of, since a Photoshop-type program has simpler data structures compared to a program where every user-adjustable parameter can be grown with keyframes to arbitrary sizes. It also serves as a testing ground for some new things I’m trying for the first time, namely saveable files, a fully-wired undo/redo system, and bitmaps that have to be considered as principal memory. Here are some of the highlights of what I did.

Undoing

The thing I spent the most time on was definitely the undo system; literally on the last hour of the night I thought I had got it juust right, I realized the way I was doing it was dumb and that I’d be spending the entire next day redoing it instead of moving onto something new. I took a more low-level approach to undo, where instead of making “undo” and “redo” variations of every function, undo entries can only record two types of “actions:” a swap of data and a shift of data. All that gets stored is the address of what value got changed, the size of the value, the old data if it’s a swap action, and how many bytes it got shifted by if it’s a shift.

struct history_action {

history_action_type Type;

memory_table_list TableName; // Contains address info.

uint64 ByteOffset;

uint64 Size;

uint64 ShiftAmount; // Only for type_shift.

int16 Direction; // Only for type_shift.

};

Originally when I thought of how to make the tree support variable-size storage I decided to encode the information in structless stacks that I had to manually traverse by re-encoding the size and type at the end of the data so I could jump back to the top and read it out, and…

case action_type_storedata:

{

uint64 *ByteSize = (uint64 *)Memory_Rewind(Memory, sizeof(uint64), P_UndoBuffer);

void *DataAddress = *(void **)Memory_Rewind(Memory, sizeof(void *), P_UndoBuffer);

void *DataStart = Memory_Rewind(Memory, *ByteSize, P_UndoBuffer);

DataStart = Memory_Rewind(Memory, sizeof(void *), P_UndoBuffer);

DataStart = Memory_Rewind(Memory, sizeof(uint64), P_UndoBuffer);

} break;

Yeah… It worked for the most part, but to be able to do more complex things without the code getting out of hand, I realized I had to separate the info from the data into fixed-size structs that allowed me to easily gauge the tree’s size or how far the playhead is from the end of the tree. I also switched from storing the actual address to storing an offset into the table the address belongs to (discussed shortly).

history_action Action = { TableName, action_type_shift, Size, ByteOffset, Amount, Direction };

history_info Info = History_GetTreeInfo(History, History->EntryPlayhead - 1);

history_action *Action = &History->Action[Info.ActionCount_Total];

*Action = ActionData;

if (Action->Type == action_type_swap) {

// store data to tree

} else if ..

// other methods of storage

}

There are slightly more complex action types I’ve made to store bitmaps more efficiently, like a type_saveonundo that only saves the data to the tree when the action is undone (so there isn’t a copy of an imported bitmap in the undo tree), but overall I try to keep the amount of knowledge of what the data is to a minimum. There’s already beneficial options from me doing this that wouldn’t be available if I took a higher-level approach, like not having to force selection/deselection to be an action that the user has to undo since an “undo delete layers” function would need to know what layers were selected. Of course there are places where this model doesn’t work and you need to know more about the data, like in the type_swap_bitmap function which has to know the data’s bytes per pixel or a a callback to destroy an FFmpeg context if we’re using that library.

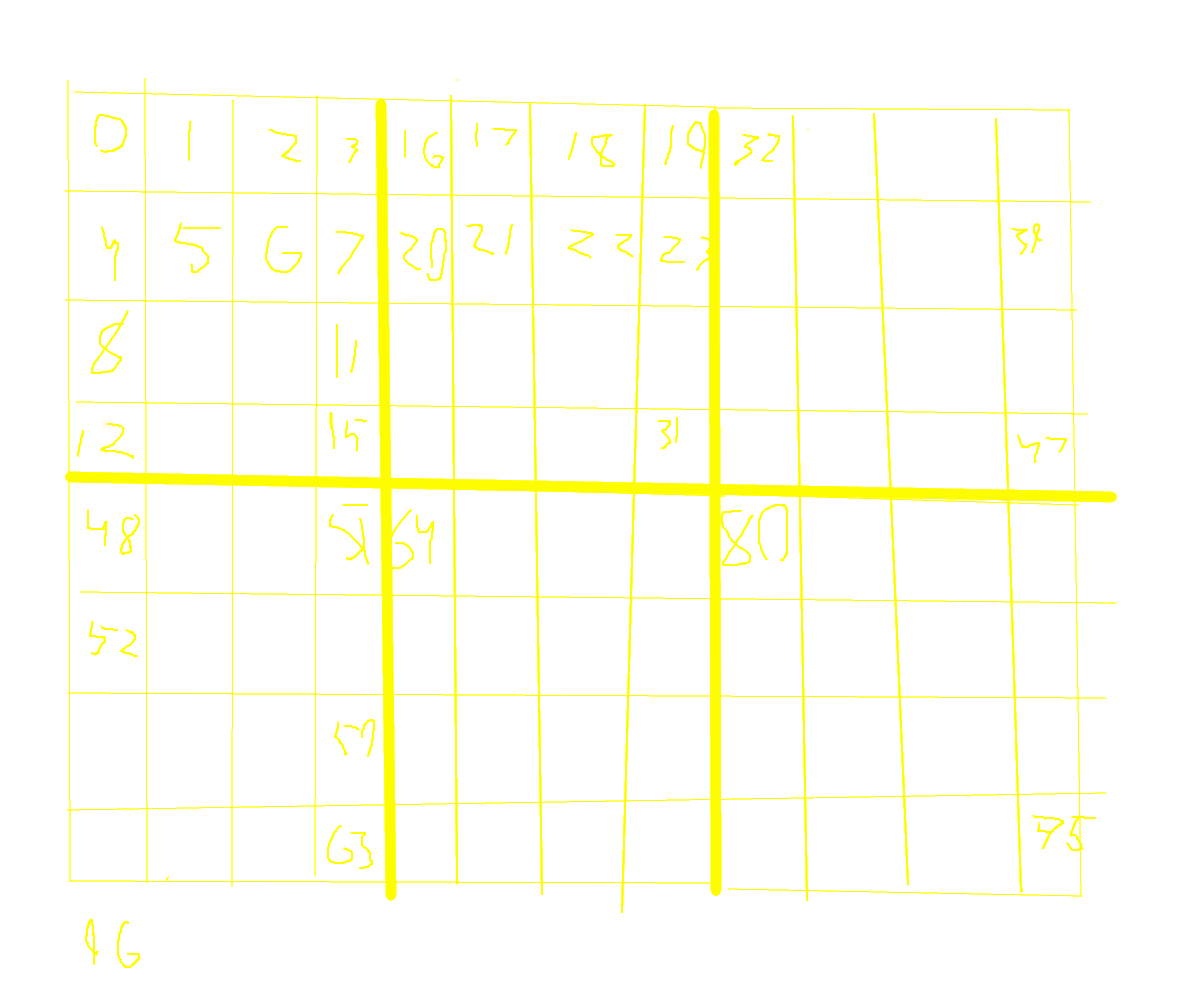

Memory

The ways in which I use memory have definitely gotten more.. pronounced, to say the least, but I’m not sure what to make of it or how to explain it. I don’t use any of C++’s dynamic things or even malloc; right now I just make one OS call at the beginning to get a gigabyte of memory and partition the data myself with five “tables” that are separated by data type and longevity. All of them store variable-size data (but some also have simple single-size arrays), typically in index order with no space between pieces of data, meaning I have to shift parts of the table forward/backward when the size of something changes or something gets deleted.

-

F_Data - Starts with a file_data struct, followed by file_layer structs on 256-byte boundaries that have variable-size mask and effect info in them. A bit unwieldy to work with, as you have to iterate over all the effects and masks to calculate sizes and offsets, and the memory order is different from the on-screen order.

-

F_BitmapData - All of the source bitmap data, uncompressed with no padding between them. Indexed by simply iterating over the file_source structs that come before the bitmap you want in file_data.

-

P_State - Misc allocations that persist, primarily a buffer for the brush stamp and a buffer for the viewport bitmap data (because creating/destroying a GL texture at a new address every frame isn’t very performant). The project_state struct is also allocated here instead of on the main stack, to make it fit together with the rest of the undo system.

-

P_UndoBuffer - Contains the data of actions in the history_entry_list struct that is accessed similar to F_BitmapData. Since undone actions can be redone it has to have two “playheads,” one to measure the true end of the buffer and one for how many undos deep you are.

-

B_ScratchSpace - Indexed by a simple playhead (aka offset value) that you increment when you want to push/pop. Identical to a ‘stack.’

It’s surely more work to maintain variable-size memory systems as opposed to the fixed-size that I primarily used in real2d, but it wasn’t as hard/buggy as I initially thought. Maybe I need to have more experience and let more users screw around to see the real horrors of it. Looking back I’d definitely change F_Data back to being fixed-size. Instead of assigning 50 effect and mask structs per layer like I did in real2d; however, I now know I can have a separate array of effects and masks that the layers just hold indices into.

The F_BitmapData table is probably the most important, since it’s gonna hold the most data. New bitmaps are simply tacked onto the end, and all bitmaps in a project file must be able to fit in RAM at any given time uncompressed. This limits tight-memory use cases, but it’s nice just being able to take the entire region and save/load it without having to do a bunch of hunting and freeing of old data. It does mean, though, if you have 4 GB of bitmaps and decide you want to resize the one on the bottom, we have to move all 4 GB forward and then back again if the user decides they want to undo.

Tiled versus “traditional” storage

In real2d I spent a fair bit of time playing with alternate storage methods of bitmaps, namely storing them as contiguous 4x4 chunks. The only real benefits this had were allowing AVX2 operations to save up to 4 pixels on a bitmap’s right edge (which tallys up to only being a fraction of a percent of a typical bitmap’s total pixel count) and save a memory lookup on each iteration in the multi-threaded tiled operations. I thought it would be a good idea, but in reality, the cost of having to re-pack each incoming video frame from FFmpeg created a bottleneck, even after trying to alleviate it with SIMD. I’d have to rewrite every decoder in the library for it to potentially be viable.

Nevertheless, there’s a big advantage tiled bitmap storage would give that would be a lot more effective here than in a video editing-type program: fixed-size memory blocks. Instead of having to store contiguous Yheightbytes + X*bytes of data and deal with the above scenario where we have to shift gigabytes of data when stuff gets deleted or cropped (which also incurs a large data penalty to the undo buffer) we can simply remove the blocks and add new ones to fill the holes with no fragmentation. Of course I’d increase the tile size to something larger like 256x256. There wouldn’t even need to be a concept of “cropping” in the first place; when the user paints a tiny area of a 4096x4096 region on a new layer, we only need to tack on blocks and use memory where they actually paint.

There’s an obvious massive drawback with this system, though, and that is it makes interfacing with OpenGL for transformation fairly more complicated since it only works with bitmaps in traditional width*height-array format. For passing the textures to OpenGL we might be able to find a way to not do the tiled->array conversion, but we’d always have to do the array->tiled conversion for every bitmap we get back, assuming we don’t want to have two separate memory models for storing bitmaps. For now I’m just gonna stick with standard arrays.

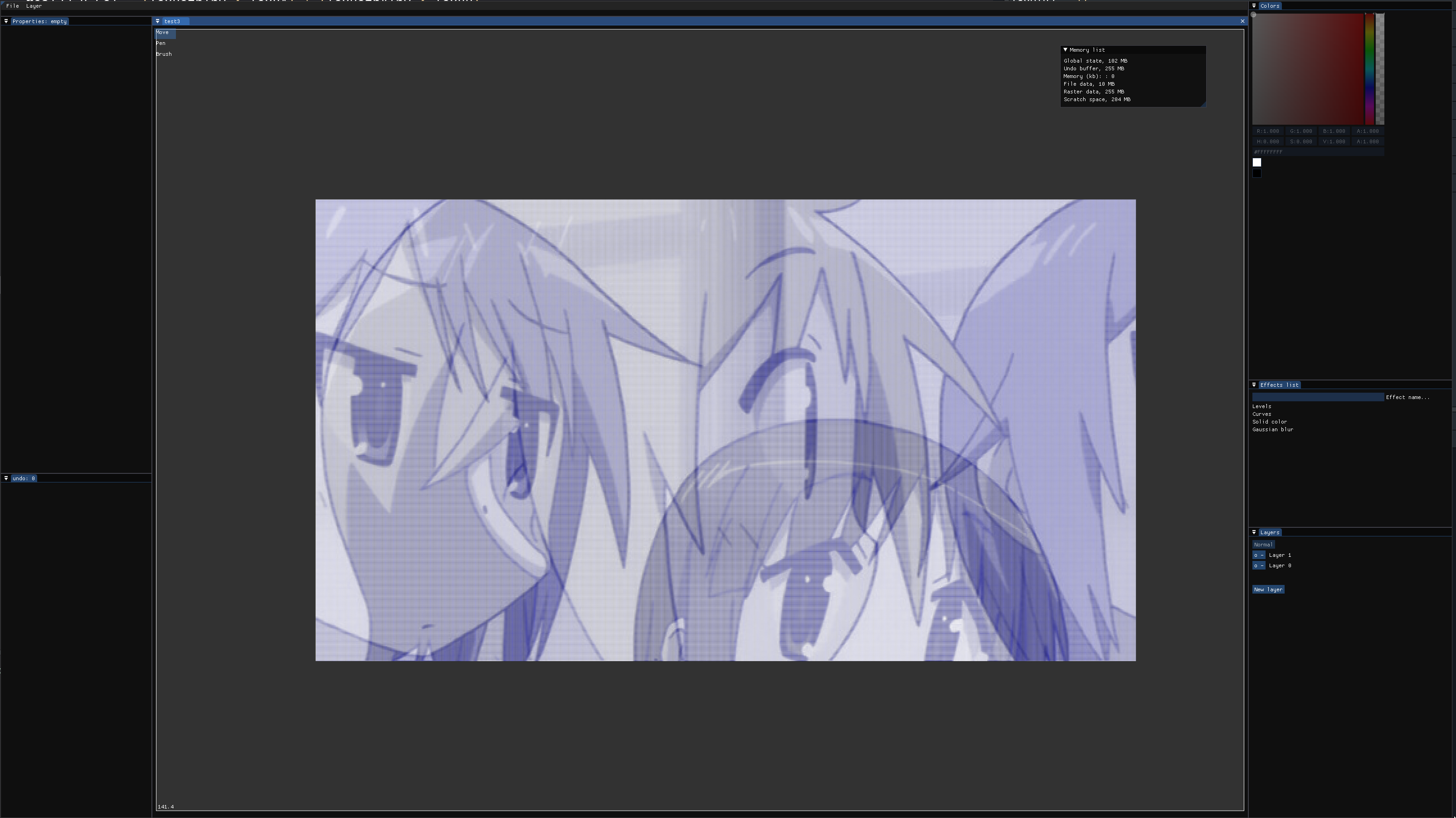

An “interactive mode”

Something that could be interesting to look into in the future would be a stronger separation of the state of changing data and the data itself, a sort of “interactive mode.” Instead of painting directly on the bitmaps and caching the old pixels to a new location, we take the problem in reverse and only commit the stroke to the bitmap once the user is finished. This has the benefit of making it much easier to implement background auto-saving and the above dynamic bitmap cropping if tiled storage is implemented (as well as making opacity on brush strokes behave how you’d expect, allowing a stroke with 40% opacity to maximally hit that 40% instead of sampling each stamp and going past the threshold).

I’ve already implemented a system like this for the shift+T transformation UI and the blending mode picker for layers, where you set a flag in the state and the renderer/UI temporarily takes a proxied set of parameters instead of the original data. I’ll probably be expanding it to work with general-purpose data in the future.

Color spaces and bit depth

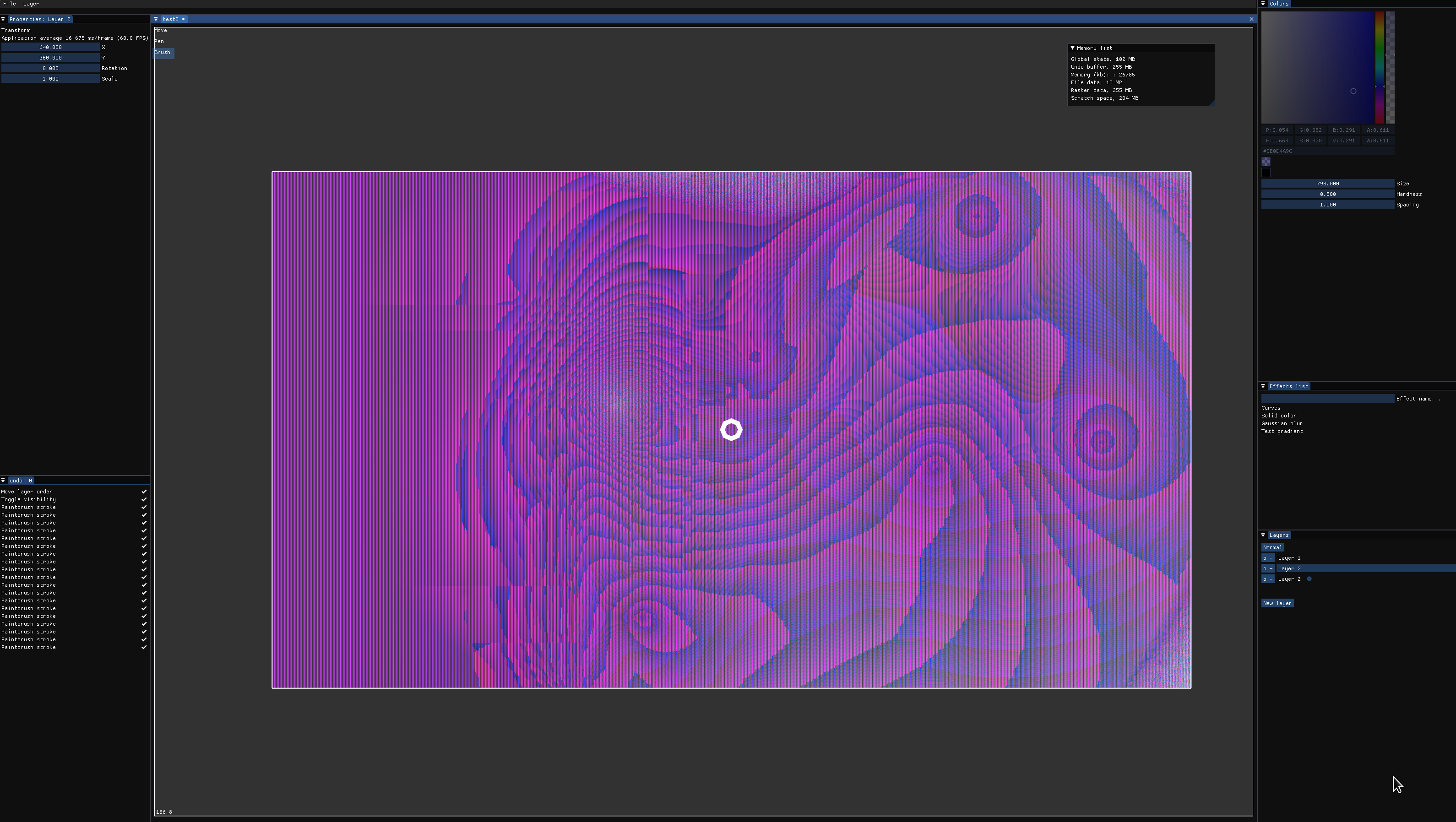

Finally I took the time to get into color spaces, and I’m pretty sure I managed to implement correct linear blending for the rendering. All incoming bitmaps are assumed to be sRGB and gamma-corrected to be linear, and after all operations are complete the framebuffer is converted back to sRGB with OpenGL’s hardware. Had to take a hammer to some of the ImGui code to carve out a path for me to enable the GL_FRAMEBUFFER_SRGB for the viewport texture, since understandably the library isn’t fleshed out in terms of color management.

As I learned; however, “correct” linear blending doesn’t look good when you work in 8 bits per channel, so I bumped up the renderer to be able to do 16 by default. I’m still not sure how to the “perceptual gamma” space in Photoshop and Gimp that they use for 8-bit works, but I’ll probably look into it at some point so low-RAM use cases can be more viable.

Next steps

I’m gonna take what I’ve learned here and resume work on real2d, probably starting with learning the proper way to evaluate Bezier/Cullman-Rom curves with a quadratic equation solver and making a graph editor to surpass AE’s default, followed by writing a fully-fledged OpenGL renderer.